Floating-point arithmetic is becoming increasingly common in today’s automotive industry. Since development is often model-based, using tools such as Simulink and dSPACE TargetLink, the model serves as an additional abstraction layer and therefore adds a stage to the development and testing process. Compared to fixed-point, floating-point comes with several advantages. The biggest one, from most developers’ point of view, is getting rid of the scaling task. Infinity and NaN (not a number) are also easier to detect, because they are represented by special values. Beyond that, precision seems to increase to the point where physical behavior can be represented almost directly (let’s see if that’s true). Finally, the range of representable values is much larger (which also comes with a downside).

So, looking at all the advantages, why not switch everything to floating-point?

Consider the following characteristics before you decide to use floating-point:

Accuracy

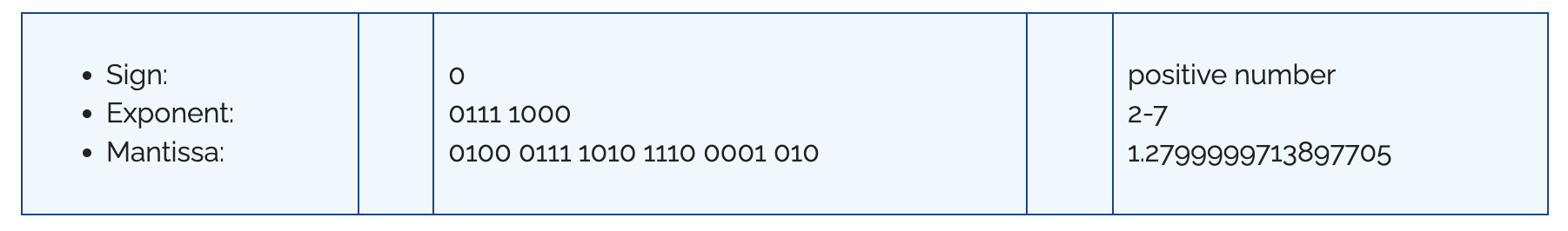

Let’s say there is an unsigned fixed-point integer with a scaling (= resolution) of 0.01. Under this assumption, the calculation 0.01 + 0.01 naturally results in exactly 0.02. Performing the same calculation in floating-point (single precision), 0.01 + 0.01 results in 0.0199999995529651641845703125. That is close to 0.02 but not the exact value. The value 0.01 itself is not represented exactly either, which becomes clear when we look at how 0.01 is actually stored in floating-point. A single-precision floating-point value is made up of three elements: 1 bit for the sign, 8 bits for the exponent and 23 bits for the mantissa, totaling 32 bits. The mantissa encodes the significant digits, and the exponent scales them by a power of two. In the case of 0.01, the mantissa is 1.2799999713897705 and the exponent is -7.

If we calculate 1.2799999713897705 × 2⁻⁷, the result is 0.00999999977648258209228515625, the closest float value to 0.01. The upper bound on the relative error caused by this kind of rounding is called the machine epsilon.
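The bit layout described above can be inspected directly. The following is a minimal sketch, assuming a platform where `float` is a 32-bit IEEE 754 value; the names `float_fields`, `decompose_float` and `recompose_normal` are made up for this illustration.

```c
#include <stdint.h>
#include <string.h>

/* The three fields of an IEEE 754 single-precision value. */
typedef struct {
    uint32_t sign;      /* 1 bit: 0 = positive, 1 = negative */
    uint32_t exponent;  /* 8 bits, stored with a bias of 127 */
    uint32_t mantissa;  /* 23 stored bits; normal numbers have an implicit leading 1 */
} float_fields;

/* Split a float into its raw bit fields (assumes a 32-bit IEEE 754 float). */
float_fields decompose_float(float value)
{
    uint32_t bits;
    float_fields f;

    memcpy(&bits, &value, sizeof bits);  /* well-defined way to read the bit pattern */
    f.sign     = bits >> 31;
    f.exponent = (bits >> 23) & 0xFFu;
    f.mantissa = bits & 0x7FFFFFu;
    return f;
}

/* Rebuild a normal number: (1 + mantissa / 2^23) * 2^(exponent - 127). */
double recompose_normal(float_fields f)
{
    double value = 1.0 + (double)f.mantissa / 8388608.0;  /* 2^23 = 8388608 */
    int e = (int)f.exponent - 127;

    for (; e > 0; --e) value *= 2.0;
    for (; e < 0; ++e) value *= 0.5;
    return f.sign ? -value : value;
}
```

For `0.01f` this yields sign 0, a stored exponent of 120 (120 - 127 = -7) and a stored mantissa of 2348810, which corresponds to the 1.2799999713897705 × 2⁻⁷ from the text.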

The first conclusion is: if you need an exact representation of values, for things like loop counters or equality checks, floating-point is not the right choice.

While roughly half of the representable values of a float variable lie between -1 and 1, accuracy decreases for larger values. In single precision, the number 16,777,217 cannot be represented; depending on the rounding mode, it becomes 16,777,216 or 16,777,218 instead. For larger numbers, the gap between neighboring representable values grows even wider.
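The limit at 16,777,216 (2²⁴) is easy to check with a round trip from integer to float and back. This is a small sketch; the helper name `survives_float_round_trip` is hypothetical.

```c
#include <stdint.h>

/* Returns 1 if n is unchanged by a round trip through single precision. */
int survives_float_round_trip(int32_t n)
{
    volatile float f = (float)n;  /* volatile keeps the value in true single precision */

    /* Compare in 64 bits so that values near INT32_MAX cannot overflow. */
    return (int64_t)f == (int64_t)n;
}
```

Above 2²⁴ only every second integer survives the round trip, and the spacing doubles again at each further power of two.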

To summarize, floating-point variables do not bring more precision than fixed-point variables. Instead, their precision changes dynamically across the value range: small numbers have high precision, large numbers have less. If your variables stay inside a clearly defined value range, similar (or even better) precision is possible with a constant scaling in fixed-point.
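For comparison, here is a sketch of the fixed-point case with a constant scaling of 0.01. The type `centi_t` and the saturating `centi_add` are assumptions made for this example, not the output of any particular code generator.

```c
#include <stdint.h>

/* Hypothetical fixed-point type: physical value = raw value * 0.01. */
typedef uint16_t centi_t;

#define CENTI_MAX 65535u  /* largest raw value, i.e. 655.35 physical */

/* Saturating addition: exact as long as the result fits the value range. */
centi_t centi_add(centi_t a, centi_t b)
{
    uint32_t sum = (uint32_t)a + (uint32_t)b;

    return (sum > CENTI_MAX) ? (centi_t)CENTI_MAX : (centi_t)sum;
}
```

The physical value 0.01 is stored as raw 1, so 0.01 + 0.01 becomes 1 + 1 = 2, which is exactly 0.02. The price is the fixed range of 0 to 655.35 and the need to handle overflow explicitly.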

Comparison

The findings of the previous section also affect comparisons. Comparisons occur in different situations:

- Compare a variable and a constant value

- Loop counter

- Switch-Case operations

These use cases need an exact comparison; otherwise, unintended behavior may result. In other use cases, such as greater/less comparisons, floating-point precision can be suitable. However, if exact and transparent behavior is intended, the inaccuracy can make the result of a calculation slightly smaller or greater than expected, so that the wrong decision is made. For these use cases, fixed-point is the first choice.
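Where floating-point values have to be compared anyway, a common workaround is a comparison with a tolerance instead of `==`. The sketch below is one possible form, shown in double precision; `nearly_equal` and its tolerance parameters are hypothetical, and suitable tolerances depend on the application.

```c
/* Absolute value without pulling in the math library. */
static double abs_double(double x)
{
    return (x < 0.0) ? -x : x;
}

/* Returns 1 if a and b agree within abs_tol, or within rel_tol of the larger magnitude. */
int nearly_equal(double a, double b, double abs_tol, double rel_tol)
{
    double diff   = abs_double(a - b);
    double mag_a  = abs_double(a);
    double mag_b  = abs_double(b);
    double larger = (mag_a > mag_b) ? mag_a : mag_b;

    return diff <= abs_tol || diff <= rel_tol * larger;
}
```

With this helper, `nearly_equal(0.1 + 0.2, 0.3, 1e-12, 1e-9)` is true even though `0.1 + 0.2 == 0.3` is false in double precision. Note that a tolerance makes the comparison robust but not exact, so loop counters and switch-case selectors are still better kept as integers.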

Influence of the compiler

With floating-point, the compiler has a much greater impact on the behavior of the code than with fixed-point. This matters even more in model-based development, where usually three stages of implementation are considered: model-in-the-loop (MIL), software-in-the-loop (SIL) and processor-in-the-loop (PIL, target object code). MIL usually represents values in double precision, while most target processors in embedded software are 32-bit, so the SIL implementation is typically done in single precision. Depending on the data type used, this can lead to different behavior between MIL and SIL/PIL in certain situations. In addition, there are three main influencing factors that you should be aware of:

Precision

Let’s assume the following code:

double v = 1e308;

double x = (v * v) / v;

The expected result of the second line of code is +∞, because v * v exceeds the range of a double. Although that is the correct result in double precision, the result can sometimes be x = v, if the processor calculates intermediate results internally with 80-bit precision. This can affect development and testing on the different implementation levels.
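Both outcomes can be reproduced on one machine by choosing the intermediate type explicitly. This is a sketch under the assumption that `long double` is wider than `double` on the platform (as with the 80-bit x87 format); both function names are made up for the illustration.

```c
#include <float.h>

/* Every intermediate is forced to double, so v * v overflows to +infinity. */
double square_then_divide_strict(double v)
{
    volatile double squared = v * v;  /* volatile store rounds to double precision */

    return squared / v;
}

/* Intermediates are kept in long double, mimicking extended-precision registers. */
double square_then_divide_extended(double v)
{
    long double squared = (long double)v * (long double)v;

    return (double)(squared / (long double)v);
}
```

For `v = 1e308` the strict variant returns +∞, while the extended variant returns `v` again wherever `long double` has a larger exponent range than `double`. The standard macro `FLT_EVAL_METHOD` from `<float.h>` indicates whether a given compiler and target evaluate intermediates in a wider format on their own.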

Optimization

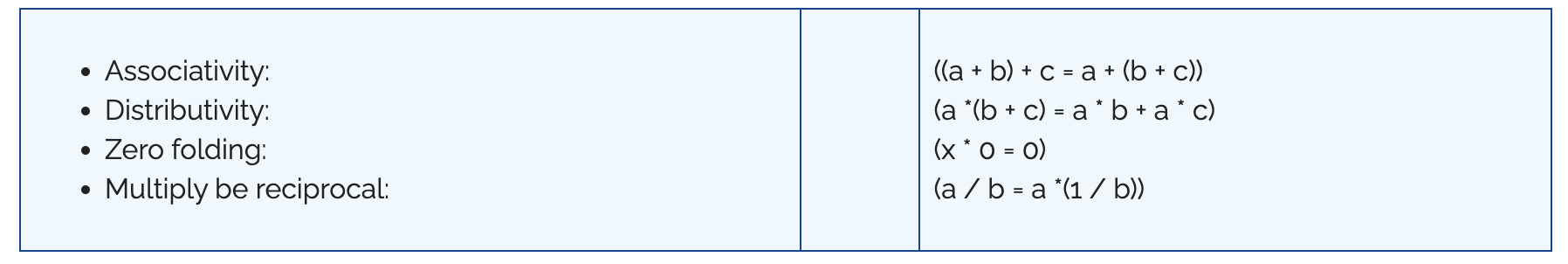

Algebraic laws do not hold in general in finite-precision arithmetic. Associativity is a typical example: (a + b) + c is not guaranteed to equal a + (b + c).

Although many compilers allow such optimizations to be controlled, value-changing optimizations can be enabled by default to improve efficiency and/or reduce code size, as is the case for the Intel® Compiler.
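A short sketch shows why reordering an expression changes its value. It assumes single precision with the default round-to-nearest mode and reuses the value 16,777,216 from the accuracy section; the function names are hypothetical.

```c
/* (a + b) + c, with each intermediate rounded to single precision. */
float sum_left_first(float a, float b, float c)
{
    volatile float partial = a + b;
    volatile float total   = partial + c;

    return total;
}

/* a + (b + c), with each intermediate rounded to single precision. */
float sum_right_first(float a, float b, float c)
{
    volatile float partial = b + c;
    volatile float total   = a + partial;

    return total;
}
```

With a = 16777216, b = 1 and c = 1, the first variant returns 16777216 because each single 1 is lost to rounding, while the second returns 16777218 because the two small values are combined first. A compiler that is allowed to reassociate additions (for example under GCC's `-ffast-math`) may turn one form into the other.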

Rounding

If the number of available digits is not sufficient to represent a real number precisely, rounding is used to represent a close value.

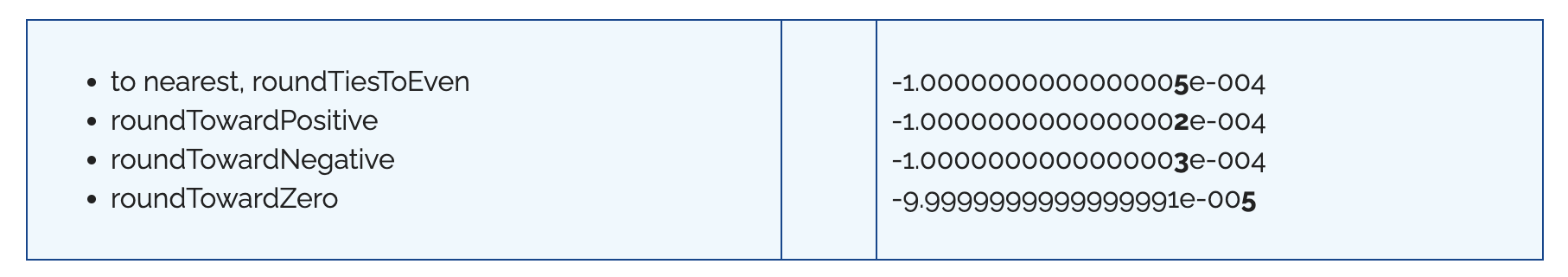

double a = 0.1;

double x = (a * -a) * (a * a);

The result for x in the second line of code depends on the rounding mode in use.

This means that depending on the compiler settings and the capabilities of the underlying CPU, the results of calculations might be different between different compilers and CPUs. This can even lead to slightly different code coverage results between SIL and PIL.

Conclusion

To answer the question of why not switch everything to floating-point: there is a wide range of use cases that improve and become easier with floating-point variables. However, there are certain use cases where floating-point can lead to unintended and non-transparent behavior. Furthermore, the results of floating-point arithmetic may differ between implementation levels such as MIL, SIL and PIL because of different compiler settings for precision, optimization and rounding. This makes testing of the target code (PIL) much more important than with fixed-point arithmetic. To deal with these new challenges and to stay ISO 26262 compliant, the test process should include a structural back-to-back test in addition to requirements-based testing. This should be done between the reference implementation (usually MIL) and the target code (PIL) if available, or SIL otherwise.
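The idea behind a back-to-back test can be sketched in a few lines: feed the same stimulus to a double-precision reference and a single-precision implementation and check the deviation against a tolerance. The low-pass filter, its coefficient and all function names below are assumptions chosen for illustration; real back-to-back tests run the generated code against the model with dedicated tooling.

```c
/* Hypothetical unit under test: first-order low-pass step, y += k * (u - y). */
double lowpass_step_reference(double y, double u, double k)
{
    return y + k * (u - y);   /* stands in for the double-precision model (MIL) */
}

float lowpass_step_target(float y, float u, float k)
{
    return y + k * (u - y);   /* stands in for the single-precision code (SIL/PIL) */
}

/* Drive both variants with the same step input and return the largest absolute deviation. */
double back_to_back_max_deviation(int steps)
{
    double y_ref   = 0.0;
    float  y_tgt   = 0.0f;
    double max_dev = 0.0;

    for (int i = 0; i < steps; ++i) {
        double dev;

        y_ref = lowpass_step_reference(y_ref, 1.0, 0.1);
        y_tgt = lowpass_step_target(y_tgt, 1.0f, 0.1f);

        dev = (double)y_tgt - y_ref;
        if (dev < 0.0) dev = -dev;
        if (dev > max_dev) max_dev = dev;
    }
    return max_dev;
}
```

The deviation is never exactly zero here, since 0.1 has different representations in float and double, so the pass criterion has to be a tolerance (for instance `back_to_back_max_deviation(100) < 1e-5`) rather than bit-exact equality.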